Introduction

In today’s hyper-connected digital world, online platforms have become the go-to spaces for communication, commerce, entertainment, and information sharing. From social media networks to e-commerce sites, forums, and content-sharing apps, these platforms serve billions of users worldwide. However, with such expansive reach and influence comes significant responsibility, particularly the need to ensure that the content shared on these platforms is safe, legal, and appropriate. This is where content moderation comes in.

Definition

A Content Moderation Solution is a system or service designed to monitor, review, and manage user-generated content on digital platforms to ensure it complies with community guidelines, legal standards, and brand policies. It helps identify and remove inappropriate, harmful, or offensive material – such as hate speech, violence, or explicit content – using a combination of automated tools and human reviewers to maintain a safe and respectful online environment.

What Is Content Moderation?

Content moderation is the practice of examining and controlling user-generated content so that it conforms to legal requirements, ethical standards, and the platform's community norms. This includes filtering out hate speech, harassment, explicit content, misinformation, spam, and other forms of harmful or inappropriate material.

Moderation can be done in several ways: with automated tools, with human reviewers, or with a combination of both. The goal is to create a secure and trustworthy digital environment for all users.

Why Content Moderation Matters

User Safety and Well-being:

One of the most important reasons for content moderation is to protect users from harmful experiences. Online platforms that lack moderation can quickly become breeding grounds for cyberbullying, harassment, threats, and graphic or disturbing content.

Unchecked content can lead to mental distress, particularly for vulnerable populations such as children and teens. Moderation helps filter harmful content and ensures that users feel safe while interacting with the platform.

Preventing the Spread of Misinformation:

Misinformation and disinformation—whether about health, politics, or public safety—can spread rapidly online and have real-world consequences. During the COVID-19 pandemic, for instance, false medical advice and conspiracy theories created confusion and endangered public health.

Effective moderation policies help detect and mitigate the spread of fake news and misleading content, reinforcing the platform’s credibility and helping users make informed decisions.

Legal Compliance and Risk Management:

Online platforms operate across different countries and jurisdictions, each with its own set of legal requirements regarding online content. Failure to comply with these laws can result in legal penalties, platform bans, or loss of user trust.

Content moderation helps the platform adhere to local and international laws concerning hate speech, copyright infringement, terrorism-related content, child exploitation, and more. It also reduces the risk of lawsuits and regulatory sanctions.

Brand Reputation and Trust:

In the digital age, a platform’s reputation can make or break its success. If a site is known for hosting offensive or harmful content, it risks losing users, advertisers, and partners. Brands that advertise on these platforms do not want to be associated with content that contradicts their values.

Strong content moderation signals that a platform takes its community standards seriously. It builds trust among users and helps maintain a positive brand image.

Enhancing User Experience:

Users are more likely to engage and stay on a platform that provides a safe, respectful, and welcoming environment. Effective moderation keeps discussions constructive, reduces spam, and filters out content that might disrupt the user experience.

This is particularly important for online communities and forums where people come to seek advice, share experiences, or collaborate on shared interests.

Supporting Diverse and Inclusive Communities:

Content moderation also plays a pivotal role in fostering inclusivity. Marginalized groups—such as people of color, LGBTQ+ individuals, or people with disabilities—often face targeted harassment online. Without moderation, these voices may be silenced or discouraged from participating.

By proactively addressing hate speech and discrimination, platforms can support diverse communities and ensure that everyone has an equal opportunity to participate.

Challenges in Content Moderation

Despite its importance, content moderation is not without challenges.

- Volume and Scale: Platforms like Facebook, YouTube, and Twitter deal with millions of posts daily. Managing such a massive volume requires advanced automation and significant human resources.

- Context and Nuance: Automated tools may struggle to understand sarcasm, satire, or cultural context, leading to false positives or missed violations. Human moderators, on the other hand, may face emotional stress and burnout from exposure to disturbing content.

- Censorship Concerns: Striking the balance between moderation and free speech is tricky. Over-moderation can lead to accusations of censorship, especially when political or controversial topics are involved.

- Evolving Threats: New forms of harmful content, such as deepfakes, AI-generated misinformation, and coded hate speech, continue to emerge, requiring constant updates to moderation strategies.

Best Practices for Effective Moderation

To navigate these complexities, platforms must adopt thoughtful, transparent, and adaptable moderation strategies. Some best practices include:

- Clear Community Guidelines: Define and communicate rules that outline acceptable behavior and content. Make sure they are easy to understand and accessible to all users.

- Hybrid Moderation Models: Combine AI-powered tools with human oversight to strike a balance between efficiency and contextual understanding.

- Transparency and Appeals: Offer transparency into moderation decisions and provide users with a fair appeals process.

- Moderator Support and Training: Provide psychological support and adequate training for human moderators, especially those dealing with high-risk content.

- User Empowerment Tools: Give users the ability to report, block, mute, or filter content according to their preferences.
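
To make the hybrid model from the list above more concrete, here is a minimal sketch of how a platform might route content between automation and human review. It assumes an automated classifier has already produced a harm score between 0 and 1 for each post. The threshold values and the names `route`, `Decision`, `REMOVE_THRESHOLD`, and `REVIEW_THRESHOLD` are hypothetical and chosen only for illustration; a real system would tune them against its own data and policies.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only.
REMOVE_THRESHOLD = 0.95   # at or above this, automation acts on its own
REVIEW_THRESHOLD = 0.60   # at or above this, a human reviewer decides


@dataclass
class Decision:
    action: str   # "remove", "human_review", or "allow"
    score: float  # harm score that led to the action


def route(score: float) -> Decision:
    """Route a post based on an assumed classifier harm score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_THRESHOLD:
        return Decision("remove", score)
    if score >= REVIEW_THRESHOLD:
        # Ambiguous cases (sarcasm, satire, cultural context) go to people.
        return Decision("human_review", score)
    return Decision("allow", score)


if __name__ == "__main__":
    for s in (0.99, 0.70, 0.10):
        print(s, route(s).action)
```

The idea is that automation handles the clear-cut cases at scale, while the uncertain middle band, where context and nuance matter most, is sent to human moderators.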

The Future of Content Moderation

As technology evolves, content moderation will too. Innovations like natural language processing, machine learning, and AI-driven sentiment analysis are already improving the accuracy and efficiency of automated moderation tools. Meanwhile, there’s growing discussion about decentralized moderation models and community-driven moderation, where users have more say in content curation.

Regulatory bodies around the world are also stepping up, proposing stricter rules for how platforms handle user-generated content. The Digital Services Act in the EU and similar regulations elsewhere indicate a shift toward greater accountability for online platforms.

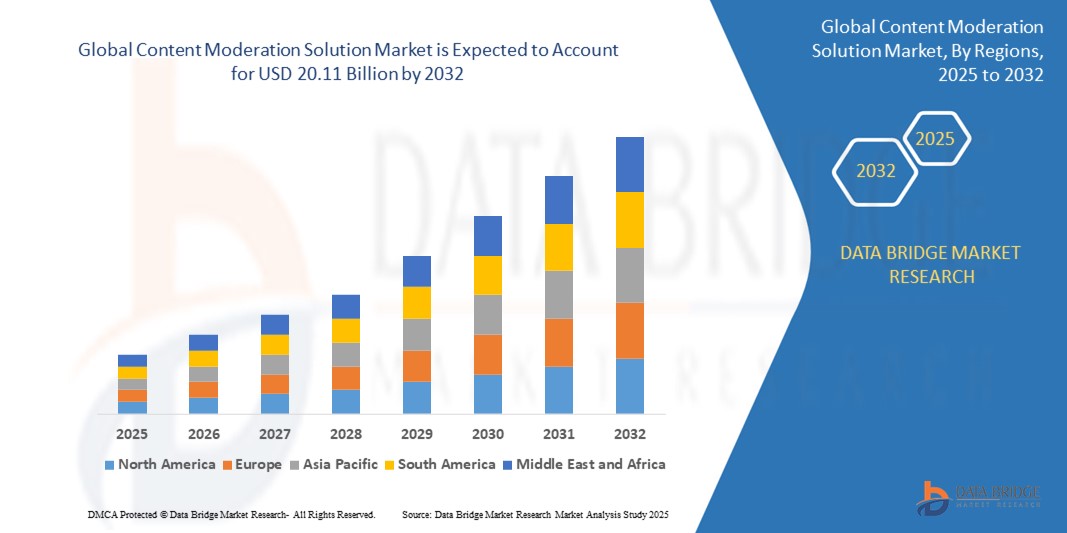

Growth Rate of Content Moderation Solution Market

According to Data Bridge Market Research, the global content moderation solution market is projected to grow from its 2024 valuation of USD 8.54 billion to USD 20.11 billion by 2032. Advances in artificial intelligence and machine learning are expected to propel the market’s growth at a compound annual growth rate (CAGR) of 11.30% between 2025 and 2032.

Read More: https://www.databridgemarketresearch.com/reports/global-content-moderation-solutions-market
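
As a quick sanity check on the figures above, the growth rate can be recomputed from the two valuations with the standard CAGR formula. This is a sketch that assumes eight compounding years, counting from the 2024 base valuation to 2032; the function name `cagr` is our own and does not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Valuations in USD billions, taken from the paragraph above.
# Assumption: 8 compounding periods (2024 base year through 2032).
rate = cagr(8.54, 20.11, 2032 - 2024)

if __name__ == "__main__":
    print(f"Implied CAGR: {rate:.2%}")
```

Under that assumption the implied rate comes out at roughly 11.3%, which is consistent with the reported figure.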

Conclusion

Content moderation is no longer an optional feature—it’s a foundational necessity for any online platform. It safeguards users, supports legal compliance, and strengthens brand integrity. As digital spaces become more integral to daily life, investing in responsible and scalable moderation practices is critical to ensuring a safe, inclusive, and engaging online environment for all. In a world where information travels at lightning speed and digital interactions shape real-world outcomes, content moderation stands as a crucial guardian of truth, safety, and community standards. Platforms that recognize and prioritize this responsibility are better positioned to thrive in the digital age.